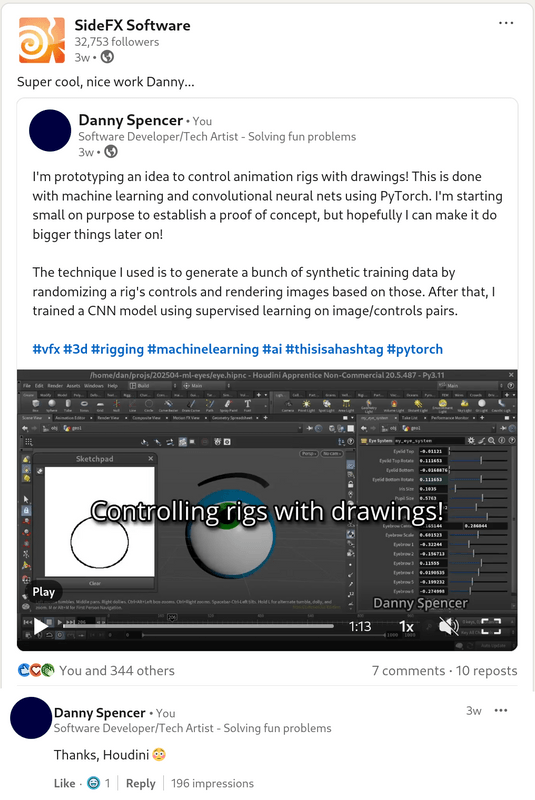

In May, I made a post on LinkedIn prototyping an idea to draw rig poses. I didn't expect it to get the attention it did!

But no, seriously, I didn't expect the attention.

Figure 1.1: DCC-senpai noticing me

Well, I suspect it has something to do with the recent AI spring and the enormous amount of attention (and money) AI is getting right now.

I'm not extremely pro- or anti-AI either, but I know people tend to feel strongly about it (my opinions are more about how it's governed than about the tech itself). I got extremely positive responses, including from industry folks who aren't big fans of GenAI.

The stuff I put out usually gets, what, 20 likes? The last thing I made that went viral was the Doom calculator I designed in Clojure. But that one didn't get recruiters InMailing me!

What inspired the idea?

I was playing around with those live sketch apps where you draw a low-fidelity sketch and they create a high-fidelity Generative AI version of it. Unfortunately, they're usually crude and terrible at emoting (as of this writing, in 2025).

More than anything, I was disappointed with how unstable the output was. If I started drawing a cat, it'd turn from a Siamese into a Calico as I added more to the drawing. This is a common problem with diffusion-based GenAI, even when you keep the seed constant.

So my thought was, "Man, it'd be much more scalable to have the underlying 3D geometry of something and just control that." ... Hey, what if...

At a high level

Here's a numbered list, to keep things concise:

1. Create the rig.
2. For each separate part of the rig (e.g. eyeball, eyebrow):
   - Generate a bunch of synthesized training data.
   - Render it, and apply edge detection if needed (e.g. using the Sobel or Canny algorithms).
3. Write a bespoke framework in Python to randomly merge the datasets.
4. Implement the ML model architecture in PyTorch.
5. Train!
6. Vibe-code* a Qt-based UI to quickly demo it to the world.

*With a lot of modifications, because GPT-4o and Claude still mess up a lot. And that was a simple drawing window!
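The merging framework itself isn't shown here, so this is only a hypothetical sketch of what "randomly merge datasets" could look like. It assumes each part's dataset is a list of `(image, params)` pairs, where `image` is a same-sized uint8 edge image (white strokes on black). Each composite pairs a random eyeball sample with a random eyebrow sample, overlays them with a pixel-wise maximum, and sometimes leaves the brow out so a classifier gets negative examples. The names `merge_samples` and `brow_prob` are made up for illustration.

```python
import random

import numpy as np


def merge_samples(eye_data, brow_data, n, brow_prob=0.5, seed=0):
    """Hypothetical sketch: build n composite samples from two per-part datasets.

    eye_data / brow_data: lists of (image, params), image being a uint8 array
    with white strokes on black, params a 1D array of that part's rig values.
    Returns dicts holding the composite image plus a label for each model.
    """
    rng = random.Random(seed)
    merged = []
    for _ in range(n):
        eye_img, eye_params = rng.choice(eye_data)
        has_brow = rng.random() < brow_prob
        if has_brow:
            brow_img, brow_params = rng.choice(brow_data)
            # Overlay strokes: a pixel is ink if it is ink in either layer.
            image = np.maximum(eye_img, brow_img)
        else:
            brow_params = None  # no brow drawn, so no brow label for this sample
            image = eye_img.copy()
        merged.append({
            "image": image,
            "has_brow": has_brow,        # label for the "is a brow drawn?" classifier
            "eye_params": eye_params,    # label for the eyeball model
            "brow_params": brow_params,  # label for the eyebrow model (None if absent)
        })
    return merged
```

Taking the maximum works because both layers are black backgrounds with white ink; for black-on-white images you'd use `np.minimum` instead.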

The tech

PyTorch and Houdini. That's it! (and OpenUSD, OpenCV, PySide, and lots of bash scripting...)

Well, the more interesting part is the methodology.

As I alluded to in the video, I generated a bunch of sample images from the rig itself.

I took the parameters from the HDA in Houdini and wrote a Python script to randomize them over roughly 1,000 frames.
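Here's a minimal sketch of that randomization step, assuming uniform sampling within a per-parameter range. The parameter names and ranges in `PARAM_RANGES` are hypothetical (the real HDA's parameters aren't listed). `random_poses` is plain Python, so the same dict can be saved as the training labels for each frame; `key_poses_on_node` only runs inside Houdini and keys each value with `hou.Keyframe`.

```python
import random

# Hypothetical parameter names and ranges; the real HDA's parameters aren't listed.
PARAM_RANGES = {
    "gaze_x": (-1.0, 1.0),
    "gaze_y": (-1.0, 1.0),
    "upper_lid": (0.0, 1.0),
    "lower_lid": (0.0, 1.0),
}


def random_poses(param_ranges, num_frames, seed=0):
    """Return {frame: {parm_name: value}} with uniformly sampled values, frames starting at 1."""
    rng = random.Random(seed)  # fixed seed so the labels can be regenerated exactly
    return {
        frame: {name: rng.uniform(lo, hi) for name, (lo, hi) in param_ranges.items()}
        for frame in range(1, num_frames + 1)
    }


def key_poses_on_node(node, poses):
    """Set one keyframe per parameter per frame on a Houdini node (Houdini only)."""
    import hou  # only importable inside Houdini's Python environment

    for frame, values in poses.items():
        for name, value in values.items():
            key = hou.Keyframe()
            key.setFrame(frame)
            key.setValue(value)
            node.parm(name).setKeyframe(key)  # node.parm() returns None for unknown names
```

In Houdini's Python shell this might be called as `key_poses_on_node(hou.node("/obj/eye_rig"), random_poses(PARAM_RANGES, 1000))`, where the node path is a placeholder. Generating the values outside Houdini and then keying them keeps the images and labels in lockstep.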

Those frames were exported with the USD ROP and rendered with usdrecord:

usdrecord --camera '/cameras/maincam' --frames $FROM_FRAME:$TO_FRAME --imageWidth 256 geo/eye.usda render/eye###.png
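The `$FROM_FRAME:$TO_FRAME` variables suggest the render was split into frame ranges by a bash script. Below is a hypothetical Python equivalent that builds the same usdrecord command for each batch, using only the flags from the command above. The batch size of 100 is an assumption.

```python
import subprocess


def frame_batches(first, last, batch_size):
    """Split the inclusive frame range [first, last] into inclusive (start, end) chunks."""
    return [
        (start, min(start + batch_size - 1, last))
        for start in range(first, last + 1, batch_size)
    ]


def usdrecord_args(start, end, usd_file="geo/eye.usda", out_pattern="render/eye###.png"):
    """Argument list for the usdrecord command above, for one frame range."""
    return [
        "usdrecord",
        "--camera", "/cameras/maincam",
        "--frames", f"{start}:{end}",
        "--imageWidth", "256",
        usd_file,
        out_pattern,  # "###" is replaced with the zero-padded frame number
    ]


def render_all(first, last, batch_size=100):
    # Renders batches one after another; check=True stops at the first failure.
    for start, end in frame_batches(first, last, batch_size):
        subprocess.run(usdrecord_args(start, end), check=True)
```

Passing an argument list instead of a shell string means the camera path doesn't need quoting. Running batches in parallel (one process per range) is the obvious next step if rendering is the bottleneck.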

After that, I applied Canny edge detection with OpenCV, then dilated the edges to make them about as thick as hand-drawn strokes.

Note: OpenCV is a must-know for stuff like this!

Model architecture

The very, very basic idea is to take a 256x256 tensor of grayscale pixels, forward-propagate it through a convolutional neural net (CNN), and output the 14 or so parameters needed to animate the rig.

The exact architecture would change based on requirements, but this is the specific one I used in my example:

- Input: 1 x 256 x 256
- Conv2d(1, 16, 7) → LeakyReLU(0.01): 16 x 256 x 256
- MaxPool2d(4): 16 x 64 x 64
- Conv2d(16, 32, 3) → LeakyReLU(0.01): 32 x 64 x 64
- MaxPool2d(2): 32 x 32 x 32
- ..... (repeat the Conv2d/LeakyReLU/MaxPool2d layers 3 more times)
- Convolution output: 256 x 4 x 4
- Linear(128) → ReLU → Dropout
- Linear(num_parameters)
- Output: num_parameters
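Here's a PyTorch sketch of that layer stack. The listed shapes only work out with "same" padding (3 for the 7x7 conv, 1 for the 3x3 convs), so that's assumed here. The channel widths of the three repeated blocks (64, 128, 256), their 3x3 kernels, and the dropout rate of 0.5 are also assumptions; only the final 256 x 4 x 4 shape is given.

```python
import torch
from torch import nn


def conv_block(in_ch, out_ch, kernel, pool):
    # "Same" padding keeps H x W unchanged through the conv (odd kernel, stride 1),
    # so the pooling layer does all of the downsampling.
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel, padding=kernel // 2),
        nn.LeakyReLU(0.01),
        nn.MaxPool2d(pool),
    )


class RigPoseCNN(nn.Module):
    """Sketch of the described architecture: 1 x 256 x 256 drawing -> rig parameters."""

    def __init__(self, num_parameters, dropout=0.5):
        super().__init__()
        self.features = nn.Sequential(
            conv_block(1, 16, 7, 4),     # -> 16 x 64 x 64
            conv_block(16, 32, 3, 2),    # -> 32 x 32 x 32
            # Assumed widths for the three repeated blocks:
            conv_block(32, 64, 3, 2),    # -> 64 x 16 x 16
            conv_block(64, 128, 3, 2),   # -> 128 x 8 x 8
            conv_block(128, 256, 3, 2),  # -> 256 x 4 x 4
        )
        self.head = nn.Sequential(
            nn.Flatten(),                 # 256 * 4 * 4 = 4096 features
            nn.Linear(256 * 4 * 4, 128),
            nn.ReLU(),
            nn.Dropout(dropout),
            nn.Linear(128, num_parameters),
        )

    def forward(self, x):
        return self.head(self.features(x))
```

For example, `RigPoseCNN(14)(torch.zeros(2, 1, 256, 256))` returns a 2 x 14 tensor, one row of rig parameters per input drawing. The first block's large kernel and 4x pooling shrink the mostly empty 256 x 256 canvas quickly, which keeps the later layers cheap.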

For the eye, I actually have three separate models:

- Classifier (is an eyebrow drawn?)

- Eyebrow (points on the NURBS curve representing the brow)

- Eyeball (gaze vector, eyelid positions)
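The losses and training loop aren't described, so this sketch assumes a common setup. The classifier outputs a single logit trained with `BCEWithLogitsLoss`, and the eyebrow and eyeball models are regressors trained with `MSELoss` against their rig parameters. `loss_for` and `train_step` are hypothetical helper names.

```python
import torch
from torch import nn


def loss_for(kind):
    """Pick a loss per model: binary 'is a brow drawn?' classifier vs. parameter regression."""
    if kind == "classifier":
        return nn.BCEWithLogitsLoss()  # raw logits in, float 0/1 targets
    if kind == "regressor":
        return nn.MSELoss()  # targets ideally normalized to a similar range
    raise ValueError(f"unknown model kind: {kind}")


def train_step(model, optimizer, images, targets, loss_fn):
    """One optimization step; images is N x 1 x H x W, targets match the model's output shape."""
    model.train()  # enables dropout
    optimizer.zero_grad()
    loss = loss_fn(model(images), targets)
    loss.backward()
    optimizer.step()
    return loss.item()
```

Under this setup, the eyebrow regressor would only see samples where a brow is actually drawn. At inference time, the classifier's output (a sigmoid above 0.5) decides whether the eyebrow model's prediction gets applied to the rig at all.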

Will I open-source it?

I want to refine this idea a bit more with some more advanced concepts first, but I'll probably open-source it at some point once I'm satisfied with it. The process is a bit messy, so it'll take a few iterations.